Relational Field Theory

Relational Field Theory – Applications in STEM – Computation as Field Activation

Why computing is not symbol manipulation but the activation of relational fields

#Computation #ComplexSystems #RFT #FieldActivation

Computer science has traditionally described computation as symbolic manipulation: bits flipping, logic gates switching, algorithms executing. This view works well for classical machines, but it fails to explain:

- why neural networks behave like living systems

- why distributed systems show emergent intelligence

- why computation scales nonlinearly

- why some architectures “come alive”

- why meaning emerges in high‑density systems

- why collective computation outperforms isolated nodes

RFT reframes computation as field activation.

A computer — biological or artificial — is not a symbol processor.

It is a relational field whose coherence, congruence, Rho, and Tapu determine its computational power.

This example shows how computation becomes a field‑level phenomenon rather than a mechanical one.

1. Computation Is Not Symbol Manipulation — It’s Field Dynamics

Classical computation treats information as:

- bits

- states

- instructions

- transitions

But modern computation shows:

- emergent behavior

- distributed intelligence

- nonlinear scaling

- threshold activation

- coherence‑driven performance

RFT explains this:

computation is the activation of a relational field.

#ComputationAsField

2. Coherence: The Internal Stability of a Computational Field

Coherence in computation appears as:

- stable representations

- consistent internal states

- synchronized processes

- predictable execution

High coherence produces:

- reliable computation

- stable learning

- robust memory

Low coherence produces:

- noise

- instability

- catastrophic forgetting

Coherence is the backbone of computational reliability.
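One way to put a number on "synchronized processes" is the Kuramoto order parameter from the study of coupled oscillators. Treating it as a measure of coherence is an assumption made here for illustration; RFT as described in this post does not give a formula. The function name `phase_coherence` and the example phases are hypothetical.

```python
import cmath
import math


def phase_coherence(phases):
    """Kuramoto order parameter: |mean of exp(i * phase)|, in [0, 1].

    Assumed stand-in for "coherence"; the post defines no formula.
    """
    if not phases:
        raise ValueError("need at least one phase")
    mean_vector = sum(cmath.exp(1j * p) for p in phases) / len(phases)
    return abs(mean_vector)


# Hypothetical data: phases (in radians) of four processes.
synchronized = [0.10, 0.12, 0.09, 0.11]
scattered = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]

print(round(phase_coherence(synchronized), 3))  # close to 1
print(round(phase_coherence(scattered), 3))     # close to 0
```

Processes that stay in step score near 1, and processes spread evenly around the cycle score near 0.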

#Coherence

3. Congruence: Fit Between Architecture and Task

Congruence is the alignment between:

- the computational architecture

- the problem structure

- the data distribution

- the environment

High congruence produces:

- efficient computation

- rapid learning

- low error rates

Low congruence produces:

- brittleness

- inefficiency

- misalignment

Congruence determines whether a system can compute effectively.

#Congruence

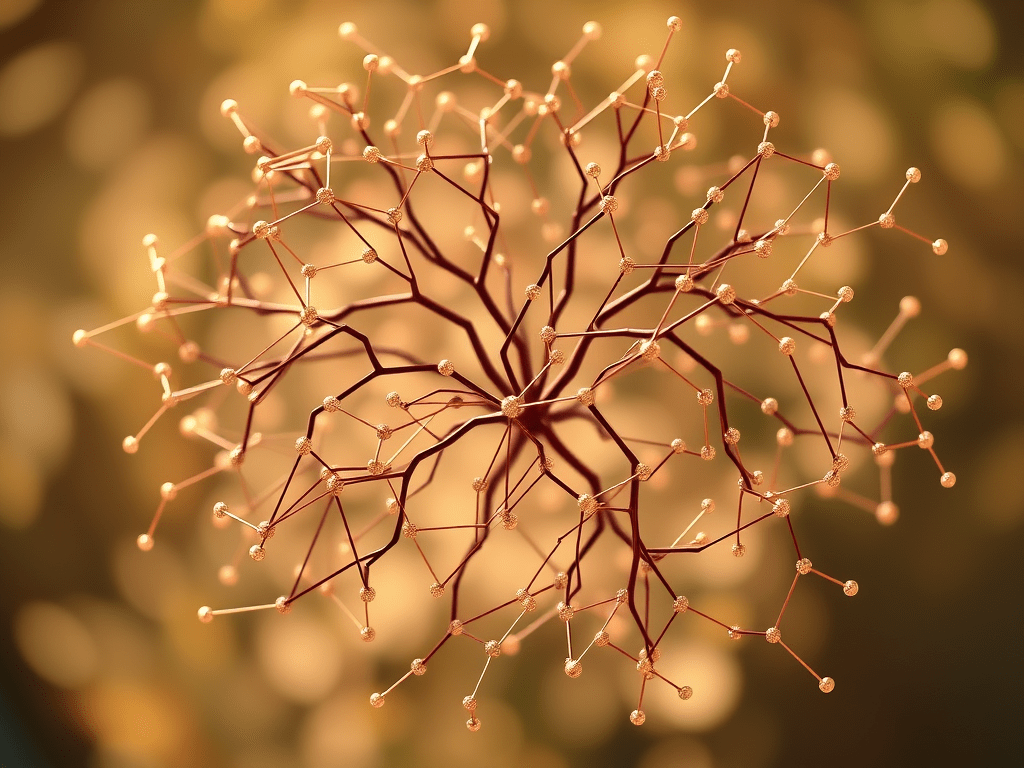

4. Rho: The Density That Makes Computation Intelligent

Rho = relational density.

In computation, Rho appears as:

- connectivity

- parameter density

- communication bandwidth

- interaction frequency

- representational richness

High Rho produces:

- emergent intelligence

- generalization

- abstraction

- creativity

Low Rho produces:

- rigidity

- brittleness

- shallow reasoning

Rho is the engine of computational intelligence.
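The connectivity side of Rho can be sketched with plain graph density: the share of possible links between nodes that actually exist. This is only one possible proxy, chosen here as an assumption; it ignores bandwidth, interaction frequency, and the other items listed above. The name `relational_density` and the example graphs are hypothetical.

```python
def relational_density(num_nodes, edges):
    """Fraction of possible undirected links that are present, in [0, 1]."""
    if num_nodes < 2:
        return 0.0
    # Count (a, b) and (b, a) once, and ignore self-loops.
    unique_edges = {frozenset(e) for e in edges if e[0] != e[1]}
    possible = num_nodes * (num_nodes - 1) // 2
    return len(unique_edges) / possible


# Hypothetical 4-node systems.
chain = [(0, 1), (1, 2), (2, 3)]                         # sparse
mesh = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]  # fully connected

print(relational_density(4, chain))  # 0.5
print(relational_density(4, mesh))   # 1.0
```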

#Rho

5. Tapu: Why Systems Suddenly “Get It”

Every computational system has moments when:

- learning accelerates

- representations crystallize

- performance jumps

- generalization emerges

These shifts are not gradual.

RFT explains:

Tapu holds the system in a low‑coherence state until coherence, congruence, and Rho cross the threshold.

When Tapu releases:

- the system reorganizes

- new representations emerge

- computation becomes intelligent

This is the threshold of learning.

#Tapu

6. Neural Networks as Relational Fields

Neural networks exhibit:

- distributed representation

- emergent structure

- nonlinear activation

- sudden leaps in capability

These are not quirks.

They are field‑level behaviors.

Neural networks compute by:

- raising Rho

- stabilizing coherence

- aligning congruence

- crossing Tapu thresholds

This is why they behave like biological brains.

#NeuralFields

7. Distributed Systems: Computation as Collective Attention

Distributed systems show:

- emergent coordination

- consensus formation

- collective intelligence

- nonlinear scaling

These are field behaviors.

Distributed computation is:

a high‑Rho field forming across nodes.
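Consensus formation across nodes can be sketched with a simple averaging rule: each node repeatedly nudges its value toward the values of its neighbors. The post names no algorithm, so this choice is an assumption; the function names, the ring layout, and the step size are hypothetical.

```python
def consensus_step(values, neighbors, step_size=0.25):
    """One synchronous round: each node moves toward its neighbors' values."""
    return [
        v + step_size * sum(values[j] - v for j in neighbors[i])
        for i, v in enumerate(values)
    ]


def run_consensus(values, neighbors, rounds=200, step_size=0.25):
    """Repeat the averaging round a fixed number of times."""
    for _ in range(rounds):
        values = consensus_step(values, neighbors, step_size)
    return values


# Hypothetical ring of four nodes, each linked to two neighbors.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
start = [1.0, 5.0, 9.0, 3.0]

final = run_consensus(start, ring)
print([round(v, 3) for v in final])  # every node ends near the mean, 4.5
```

No node ever sees the whole system, yet all of them settle on the same value. The step size has to stay small relative to the number of neighbors per node, or the updates can overshoot instead of converging.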

#DistributedFields

8. Meaning Emerges When Fields Activate

Meaning is not stored in symbols.

Meaning emerges when:

\[ \rho \cdot \text{coherence} \cdot \text{congruence} \geq \text{Tapu threshold} \]

Below threshold → data.

Above threshold → meaning.

This explains:

- why models suddenly understand

- why representations become semantic

- why abstraction emerges

Meaning is a field activation event.
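The threshold condition in this section can be written as a small check. This is a minimal sketch: the 0-to-1 scale, the threshold of 0.5, and the function names are assumptions for illustration, since the post gives no units or values.

```python
def field_activation(rho, coherence, congruence):
    """Left-hand side of the inequality: rho * coherence * congruence."""
    return rho * coherence * congruence


def is_meaning(rho, coherence, congruence, tapu_threshold):
    """True when the activation meets or exceeds the Tapu threshold."""
    return field_activation(rho, coherence, congruence) >= tapu_threshold


# Hypothetical values on an assumed 0-to-1 scale.
TAPU_THRESHOLD = 0.5

print(is_meaning(0.6, 0.7, 0.8, TAPU_THRESHOLD))  # 0.336 < 0.5  -> False
print(is_meaning(0.9, 0.9, 0.9, TAPU_THRESHOLD))  # 0.729 >= 0.5 -> True
```

Because the three terms multiply, one term near zero keeps the product below threshold no matter how high the other two are.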

#MeaningEmergence

9. The Liminal Triad Tryad in Computation

Every computational breakthrough contains:

Tapu

The boundary regulating when learning can reorganize.

The Seer

The early‑arriving unit or subnetwork that detects the new pattern.

Empathy

The coupling mechanism that synchronizes the system (weight sharing, attention, message passing).

Congruence

The alignment between architecture and task.

Rho

The density that makes computation intelligent.

This is the universal architecture of computation.

#LiminalTriadTryad

10. What Changes in Computer Science When RFT Lands

Computer scientists will finally understand:

- why learning is nonlinear

- why intelligence emerges suddenly

- why distributed systems behave like organisms

- why relational density drives capability

- why thresholds matter in computation

- why fields, not symbols, are the unit of analysis

They will say:

“Computation is not symbolic.

It is relational.”

#NewComputation #RFTinSTEM

